Reality versus "Demo Magic"

If you’ve spent any time browsing AI development content, you’ve probably seen the flashy headlines: “I built an app in 30 minutes!” The novelty is hard to ignore. But peel back the layers and you’ll often find a different story: authentication held together with duct tape, zero data persistence, nonexistent error handling, and security practices that wouldn’t pass even the most forgiving audit.

At Modern Product, we took a different path with Mission Control. Instead of shooting for quick, impressive demos, we designed the project around one goal: production-grade readiness. The point wasn’t to prove that an app could be built with generative AI; it was to test whether such an app could meet the rigorous standards required of real-world, scalable software. Surprisingly, these constraints didn’t act as roadblocks. They became accelerators, guiding smarter development and more efficient use of AI.

Let’s start with my background and experience, because they ultimately determined where I could effectively leverage the LLMs (a productivity multiplier) and where I had to trust them blindly (a meager gain).

Who is Modern Product?

I’ve spent 24 years in the tech industry, starting out as a Software Engineer before transitioning into management roles and eventually finding my home in Product Management. For over two decades, I’ve focused on Product Management and Product Leadership, working closely with Engineering and Design teams across the entire product development lifecycle. After spending 7+ years in senior and executive management positions, I founded Modern Product Consulting six years ago to share my expertise at scale.

Here’s how my background connects to the “Mission Control Challenge.” As a Technology Leader, I’m as comfortable discussing complex engineering topics as I am leading strategic business conversations with executives. First and foremost, I consider myself an expert in Product Management and Product Strategy. I’d call myself an intermediate full-stack Software Engineer and a solid QA practitioner, but I have no real skills in infrastructure or DevOps. I’ve worked alongside some incredible UX Design professionals, but I’m not a designer myself.

When I took on the Mission Control challenge, I knew there would be areas where I could lean on my own expertise and others where I’d need significant support from LLMs to build a production-ready application from scratch. That’s the key: our insights here come from where my background let me amplify productivity with the LLM and where I had to trust the LLM to fill the gaps.

So... What Is Mission Control, Exactly?

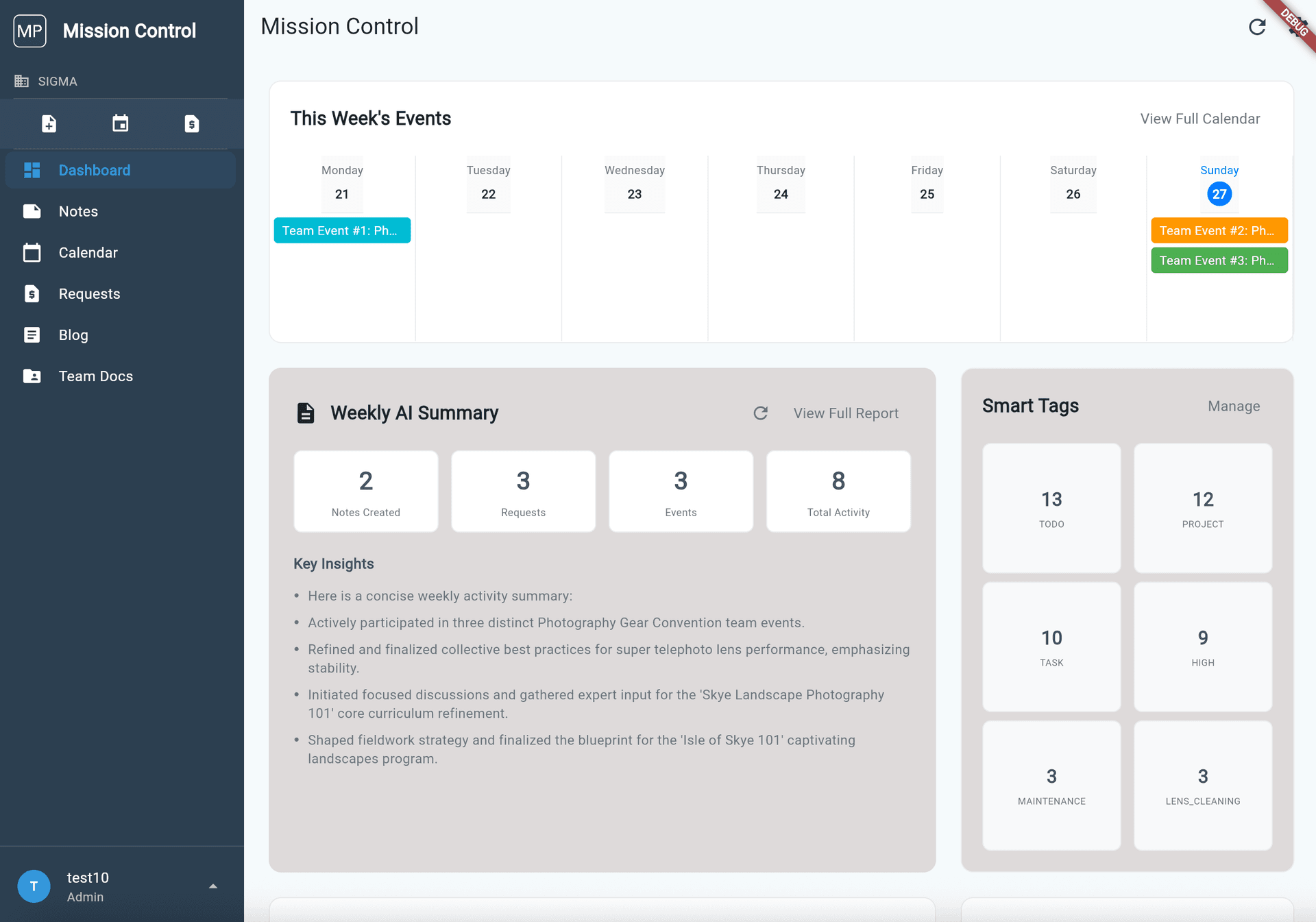

Imagine your entire team’s activity—notes, events, requests—flowing through a single, beautifully organized workspace. Instantly see what matters: smart tags, AI-surfaced insights, a real-time pulse on who’s doing what, and weekly digests that zero in on actions, decisions, and blockers. This isn’t just collaboration; it’s your team running at its sharpest, with the chaos streamlined and the signals amplified.

(ChatPRD-generated Product Marketing messaging)

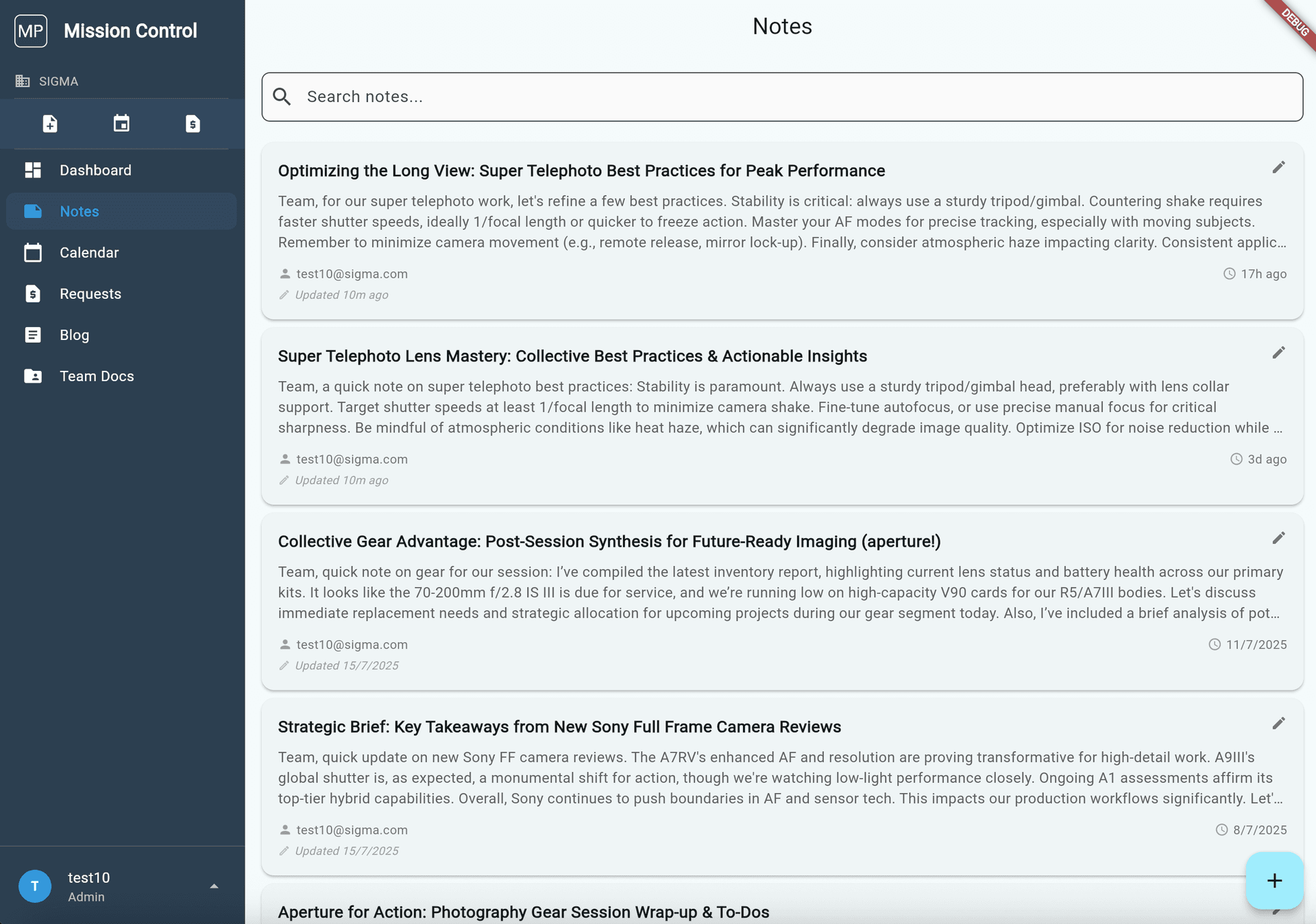

Mission Control is a B2B SaaS application that supports multiple customers with multiple users, all in hypothetical team-collaboration scenarios. It showcases collaboration features like shared notes, request management, event management, team documentation, and public blogs managed across a team. We also wanted to show how AI/LLM technology can enhance the Product Experience itself, so Mission Control includes an AI-generated "Weekly Summary," personalized "Action Items" and "Notifications" for individual users, and automated AI-based tagging across all content, which feeds into search and team-based productivity stats and reporting.
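To make the "tagging feeds search and stats" idea concrete, here's a minimal sketch, not Mission Control's actual code. It assumes each content item gets a list of tags from an LLM; `fake_llm_tagger` is a hypothetical keyword-matching stand-in for that call, and `ContentItem`, `build_tag_index`, and `tag_counts_by_user` are illustrative names. The same tags populate an inverted index for search and per-user counts for reporting.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class ContentItem:
    item_id: str
    author: str
    text: str
    tags: list[str] = field(default_factory=list)


def fake_llm_tagger(text: str) -> list[str]:
    """Hypothetical stand-in for an LLM tagging call: returns vocabulary words found in the text."""
    vocabulary = ["launch", "budget", "hiring", "blocker"]
    lowered = text.lower()
    return [word for word in vocabulary if word in lowered]


def build_tag_index(items):
    """Inverted index for search: tag -> set of item ids carrying that tag."""
    index = defaultdict(set)
    for item in items:
        for tag in item.tags:
            index[tag].add(item.item_id)
    return index


def tag_counts_by_user(items):
    """Per-author tag counts, the raw material for team productivity stats."""
    stats = defaultdict(Counter)
    for item in items:
        stats[item.author].update(item.tags)
    return stats


# Example usage with made-up content
items = [
    ContentItem("n1", "ana", "Launch checklist and budget review"),
    ContentItem("r1", "ben", "Hiring plan is a blocker for launch"),
]
for item in items:
    item.tags = fake_llm_tagger(item.text)

index = build_tag_index(items)
print(sorted(index["launch"]))  # ['n1', 'r1']
```

Tagging once and reusing the result for both search and reporting keeps the (relatively expensive) LLM call off the query path.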

(NOTE: Mission Control is a production-ready application that we aren't going to take public. It was built solely to showcase the transformative possibilities of AI and large language models (LLMs) in modern product workflows, and we hope it can serve as a foundational tech stack for founders who want to springboard into product development for a new venture. We wanted to highlight how AI/LLMs can boost productivity and speed up time-to-market, and to do so in a real-world production environment.)

The Production Realities That Guided Mission Control

When building a business-critical application, flashy UI elements will never outweigh the need for robust functionality and reliability. Mission Control wasn’t going to be another toy project. It had to solve the same challenges your team faces when scaling up from idea to execution. Here are the key production requirements that defined the project.

Performance, Reliability, & Scalability

Performance, reliability, and scalability are crucial for building successful products. Prioritizing these early ensures systems run efficiently, handle growth, and stay dependable over time—giving companies a strong foundation for sustainable success in a competitive market.

Flexibility & Extensibility

A flexible tech stack is key for any forward-thinking organization. As needs and strategies evolve, choosing adaptable tools ensures long-term success. By selecting a tech stack that supports growth and change, businesses can innovate and stay competitive.

Universal, Expected Capabilities

Foundational features are key to aligning a product with its strategy and meeting user expectations. In B2B SaaS, the app architecture must support multiple customers (multi-tenancy), keep each customer's data secure, and include essentials like user accounts, login flows, and role-based access. These "table stakes" must be perfected before adding advanced features in order to deliver a reliable product.
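As an illustration of those "table stakes," here's a minimal sketch of combining tenant isolation with role-based access. It's a generic pattern, not Mission Control's implementation; the role names, the `PERMISSIONS` table, and `can_access` are hypothetical. The tenant check runs first, so even an admin can never reach another customer's data.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    VIEWER = "viewer"
    MEMBER = "member"
    ADMIN = "admin"


# Hypothetical permission sets per role; a real product would define its own.
PERMISSIONS = {
    Role.VIEWER: {"read"},
    Role.MEMBER: {"read", "write"},
    Role.ADMIN: {"read", "write", "manage_users"},
}


@dataclass(frozen=True)
class User:
    user_id: str
    tenant_id: str  # the customer organization this user belongs to
    role: Role


@dataclass(frozen=True)
class Document:
    doc_id: str
    tenant_id: str  # every record is stamped with its owning tenant


def can_access(user: User, doc: Document, action: str) -> bool:
    """Allow an action only inside the user's own tenant, and only if the role grants it."""
    if user.tenant_id != doc.tenant_id:
        return False  # tenant isolation: never cross customer boundaries
    return action in PERMISSIONS[user.role]


# Example usage with made-up tenants and users
alice = User("u1", tenant_id="acme", role=Role.MEMBER)
bob = User("u2", tenant_id="globex", role=Role.ADMIN)
roadmap = Document("d1", tenant_id="acme")

print(can_access(alice, roadmap, "write"))         # True
print(can_access(alice, roadmap, "manage_users"))  # False
print(can_access(bob, roadmap, "read"))            # False
```

In production, a check like this would typically be enforced on the server or in the data layer as well, not only in the client.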

Real-World Development Workflows

Efficient development pipelines are key for scalable, collaborative production teams. Source control tracks changes and streamlines teamwork, while build scripts automate deployments. Clear configuration files maintain consistency, and thorough README documentation helps team members navigate projects easily. Containerization provides consistent, reproducible environments across machines, reducing “it works on my machine” issues. Together, these practices enable sustainable software development.

These aren’t just hypotheticals—they’re the foundations for how Mission Control was designed, built, and deployed.

How Constraints Accelerated Development

1. Rigorous Planning Led to Clearer Specifications and Better Outputs

Modern product development relies on smart planning and clear specifications while executing as leanly and efficiently as possible. Applying this rigor to AI-assisted workflows paid off significantly. Well-defined requirements gave the LLMs clear direction and steered them away from vague or subpar outputs. For instance, specifying detailed user stories and edge cases led the models to generate more focused, high-quality code and content.
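Here's a minimal sketch of what "detailed user stories and edge cases" can look like when turned into a prompt. It isn't our actual prompt template; `UserStory`, `build_prompt`, the wording of the prompt, and the example story are all hypothetical. The stack string simply reuses components named later in this post.

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    role: str
    goal: str
    benefit: str
    acceptance_criteria: list[str] = field(default_factory=list)
    edge_cases: list[str] = field(default_factory=list)


def build_prompt(story: UserStory, stack: str) -> str:
    """Render a structured story into a prompt that spells out scope, criteria, and edge cases."""
    lines = [
        f"Tech stack: {stack}",
        f"User story: As a {story.role}, I want to {story.goal} so that {story.benefit}.",
        "Acceptance criteria:",
        *[f"- {criterion}" for criterion in story.acceptance_criteria],
        "Edge cases to handle explicitly:",
        *[f"- {case}" for case in story.edge_cases],
        "Return production-ready code with error handling and tests.",
    ]
    return "\n".join(lines)


# Example usage with a made-up story
story = UserStory(
    role="team member",
    goal="tag a note with a project label",
    benefit="teammates can find it in search",
    acceptance_criteria=["Tags are saved with the note", "Tags appear in search results"],
    edge_cases=["Duplicate tags", "Note deleted while tagging"],
)
prompt = build_prompt(story, stack="Flutter/Dart frontend, Firebase backend")
print(prompt)
```

Compared with a one-line request like "add tagging to notes," the structured version leaves the model far less to guess.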

Measurable Benefit: Reduced rework significantly (ballpark 5–10x) compared to using generic prompts, speeding up delivery timelines.

2. Leveraging a Modern Tech Stack Boosted LLM Effectiveness

Because we chose well-supported, modern tech stack components, the LLMs could draw on a larger and more reliable base of training data and expertise. Popular stacks like Flutter, GCP/Firebase, and Docker, along with well-supported languages like Dart and Python, were well within the models' knowledge base, which improved the quality of their solutions. This alignment reduced gaps in generated outputs and improved compatibility with real-world standards.

Measurable Benefit: Increased engineering throughput dramatically (10–50x) and cut troubleshooting time by at least 5–20x, with the AI producing production-ready suggestions more often.

3. "Shootout" Testing Optimized LLM Selection for Each Task

Just as we would in real-world product development when evaluating new platforms or technologies, we took a "shootout" approach, testing platforms/LLMs like Claude Sonnet, ChatGPT (GPT-4), Perplexity Pro, and Copilot against each other on various tasks. By A/B testing their performance, we identified which model was best suited for specific needs, such as content generation, coding/debugging, DevOps, or architecture planning.
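A shootout like this boils down to scoring each model on comparable tasks and picking a winner per category. Here's a minimal sketch of that tally, assuming a simple 1–5 rubric score per trial. The model names and scores are placeholders, not our measured results, and `best_model_per_category` is a hypothetical helper.

```python
from collections import defaultdict
from statistics import mean

# (task_category, model, rubric score 1-5): placeholder data only, not real results.
trials = [
    ("coding", "model_a", 4), ("coding", "model_a", 5), ("coding", "model_b", 3),
    ("coding", "model_b", 4), ("content", "model_a", 3), ("content", "model_b", 5),
    ("content", "model_b", 4), ("devops", "model_a", 4), ("devops", "model_b", 2),
]


def best_model_per_category(results):
    """Average each model's scores within a category and return the top model per category."""
    scores = defaultdict(lambda: defaultdict(list))
    for category, model, score in results:
        scores[category][model].append(score)
    return {
        category: max(models, key=lambda m: mean(models[m]))
        for category, models in scores.items()
    }


print(best_model_per_category(trials))
# {'coding': 'model_a', 'content': 'model_b', 'devops': 'model_a'}
```

Averaging per category rather than overall is what surfaces the "different model for different jobs" pattern; a single leaderboard would hide it.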

Measurable Benefit: Relative to when we started, our workflow efficiency improved 2x, as we matched tasks to the best-performing model, minimizing wasted effort and maximizing output quality.

Actionable Takeaways for Tech Leaders & Teams

Use Your Team's Expertise to Guide AI

After 200 hours of deep exploration, one thing is clear: having experts "behind the wheel" is critical to truly unlocking LLM potential. Treat LLMs like a "gifted intern." They need monitoring and guidance, but when pointed in the right direction, they can produce incredible results and exponentially boost productivity in specific areas.

AI Won’t Replace Expertise, But It’s Still Valuable

AI isn’t ready to replace skilled professionals, but even in areas where your expertise is lacking, you can still achieve more modest productivity gains of 25–100%. It’s a worthwhile tool for augmenting your team, but it’s not a reason to eliminate your "doers." Skilled professionals remain essential for critical thinking, contextual decisions, and creative problem-solving.

Strategic Processes Drive Better Results

The effectiveness of LLMs for product development improves significantly with strong planning, clear requirements, and well-defined specifications. A strategic approach to prompting, learning which LLMs excel at specific tasks, and iterating along the way will maximize the benefits of integrating AI into your workflows.

By leveraging AI strategically and complementing it with human expertise, you can unlock precision and speed in your product development process—without compromising quality.

NEXT POST: We'll walk you through the early Product Strategy/Product Management work we did and what we learned in working with various AI platforms/LLMs.